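This article analyzes how Zephyr's network-layer offload is used and how its call mechanism works at run time.

The article "Zephyr Network Transport Offloading: Overview" mentioned that Zephyr's network transport offloading supports a socket offloading API. This article explains how to add a third-party TCP/IP stack to Zephyr by way of offloading, and how Zephyr calls into it.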

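Usage

The net offload implementation lives in the driver (drv). Its initialization function is registered at compile time and kept statically in the net iface init section. At run time, initialization registers the iface API, after which the application layer can reach the net offload implemented in the driver.

1. Configuration

Set CONFIG_NET_OFFLOAD=y; network-layer offload is then enabled at build time.

2. Implement the net offload API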

Following the definition of struct net_offload in net_offload.h, implement Zephyr's net_offload abstraction on top of the third-party TCP/IP API:

    struct net_offload {
        /**
         * This function is called when the socket is to be opened.
         */
        int (*get)(sa_family_t family,
                   enum net_sock_type type,
                   enum net_ip_protocol ip_proto,
                   struct net_context **context);

        /**
         * This function is called when user wants to bind to local IP address.
         */
        int (*bind)(struct net_context *context,
                    const struct sockaddr *addr,
                    socklen_t addrlen);

        /**
         * This function is called when user wants to mark the socket
         * to be a listening one.
         */
        int (*listen)(struct net_context *context, int backlog);

        /**
         * This function is called when user wants to create a connection
         * to a peer host.
         */
        int (*connect)(struct net_context *context,
                       const struct sockaddr *addr,
                       socklen_t addrlen,
                       net_context_connect_cb_t cb,
                       int32_t timeout,
                       void *user_data);

        /**
         * This function is called when user wants to accept a connection
         * being established.
         */
        int (*accept)(struct net_context *context,
                      net_tcp_accept_cb_t cb,
                      int32_t timeout,
                      void *user_data);

        /**
         * This function is called when user wants to send data to peer host.
         */
        int (*send)(struct net_pkt *pkt,
                    net_context_send_cb_t cb,
                    int32_t timeout,
                    void *user_data);

        /**
         * This function is called when user wants to send data to peer host.
         */
        int (*sendto)(struct net_pkt *pkt,
                      const struct sockaddr *dst_addr,
                      socklen_t addrlen,
                      net_context_send_cb_t cb,
                      int32_t timeout,
                      void *user_data);

        /**
         * This function is called when user wants to receive data from peer
         * host.
         */
        int (*recv)(struct net_context *context,
                    net_context_recv_cb_t cb,
                    int32_t timeout,
                    void *user_data);

        /**
         * This function is called when user wants to close the socket.
         */
        int (*put)(struct net_context *context);
    };

    /* The vender_-prefixed network-layer functions must be implemented
     * with the third-party TCP/IP stack.
     */
    static struct net_offload vender_offload = {
        .get = vender_get,
        .bind = vender_bind,
        .listen = vender_listen,
        .connect = vender_connect,
        .accept = vender_accept,
        .send = vender_send,
        .sendto = vender_sendto,
        .recv = vender_recv,
        .put = vender_put,
    };
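To make the wrapping step more concrete, here is a minimal, self-contained sketch of how two of the callbacks (get and put) might sit on top of a third-party stack. Everything in it is a stand-in invented for illustration: struct mock_context, struct mock_offload, and the vnd_socket_open/vnd_socket_close functions are hypothetical names, not Zephyr or vendor APIs. The idea shown is only that get opens a vendor socket and remembers its handle in the context, and put uses that handle to release the socket again.

```c
#include <errno.h>
#include <stddef.h>

/* Hypothetical third-party stack: a tiny fixed pool of socket handles. */
#define VND_MAX_SOCKETS 2
static int vnd_in_use[VND_MAX_SOCKETS];

static int vnd_socket_open(void)
{
	for (int i = 0; i < VND_MAX_SOCKETS; i++) {
		if (!vnd_in_use[i]) {
			vnd_in_use[i] = 1;
			return i;	/* index doubles as the handle */
		}
	}
	return -1;			/* pool exhausted */
}

static void vnd_socket_close(int handle)
{
	vnd_in_use[handle] = 0;
}

/* Simplified stand-in for struct net_context. */
struct mock_context {
	int vnd_handle;	/* driver-private vendor handle, -1 when unset */
};

/* Simplified stand-in for struct net_offload (two callbacks only). */
struct mock_offload {
	int (*get)(struct mock_context *ctx);
	int (*put)(struct mock_context *ctx);
};

/* "Open": allocate a vendor socket and remember it in the context. */
static int vender_get(struct mock_context *ctx)
{
	int handle = vnd_socket_open();

	if (handle < 0) {
		return -ENOMEM;
	}
	ctx->vnd_handle = handle;
	return 0;
}

/* "Close": release the vendor socket recorded in the context. */
static int vender_put(struct mock_context *ctx)
{
	if (ctx->vnd_handle < 0) {
		return -EBADF;
	}
	vnd_socket_close(ctx->vnd_handle);
	ctx->vnd_handle = -1;
	return 0;
}

const struct mock_offload vender_offload_sketch = {
	.get = vender_get,
	.put = vender_put,
};
```

A caller would go through the table, for example vender_offload_sketch.get(&ctx), and never call the vnd_ functions directly. The remaining callbacks would follow the same pattern of translating between the context and the vendor handle.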

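3. Implement the net offload initialization function

The net offload initialization function registers vender_offload with the iface. It is the function installed as the init hook of vender_iface_api, so its signature must match void (*init)(struct net_if *iface):

    static void vender_iface_init(struct net_if *iface)
    {
        /* Register the net offload implementation with the iface. */
        iface->if_dev->offload = &vender_offload;
    }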

4. Register the device

Register the offload device with the NET_DEVICE_OFFLOAD_INIT macro:

    struct net_if_api {
        void (*init)(struct net_if *iface);
    };

    static const struct net_if_api vender_iface_api = {
        .init = vender_iface_init
    };

    NET_DEVICE_OFFLOAD_INIT(vender_net, CONFIG_NET_VENDER_NAME,
                            vender_init, device_pm_control_nop,
                            &vender_driver_data, NULL,
                            CONFIG_NET_VENDER_INIT_PRIORITY, &vender_iface_api,
                            VENDER_MTU);

After that, when upper layers call the net_offload_ APIs in net_offload.h, the calls land in the corresponding vender_ functions of vender_offload.

Implementation

This section analyzes how a net_offload_ API call reaches the corresponding vender_ function.

Compile-time registration

NET_DEVICE_OFFLOAD_INIT forwards to Z_NET_DEVICE_OFFLOAD_INIT, which expands into two macros: Z_DEVICE_DEFINE and NET_IF_OFFLOAD_INIT. Z_DEVICE_DEFINE registers the driver-level initialization function vender_init; we do not expand it here and follow only the net offload path.

    #define Z_NET_DEVICE_OFFLOAD_INIT(node_id, dev_name, drv_name, init_fn, \
                                      pm_control_fn, data, cfg, prio,      \
                                      api, mtu)                            \
        Z_DEVICE_DEFINE(node_id, dev_name, drv_name, init_fn,              \
                        pm_control_fn, data, cfg, POST_KERNEL, prio, api); \
        NET_IF_OFFLOAD_INIT(dev_name, 0, mtu)

    #define NET_IF_OFFLOAD_INIT(dev_name, sfx, _mtu)                    \
        static Z_STRUCT_SECTION_ITERABLE(net_if_dev,                    \
                    NET_IF_DEV_GET_NAME(dev_name, sfx)) = {             \
            .dev = &(DEVICE_NAME_GET(dev_name)),                        \
            .mtu = _mtu,                                                \
        };                                                              \
        static Z_DECL_ALIGN(struct net_if)                              \
            NET_IF_GET_NAME(dev_name, sfx)[NET_IF_MAX_CONFIGS]          \
            __used __in_section(_net_if, static, net_if) = {            \
            [0 ... (NET_IF_MAX_CONFIGS - 1)] = {                        \
                .if_dev = &(NET_IF_DEV_GET_NAME(dev_name, sfx)),        \
                NET_IF_CONFIG_INIT                                      \
            }                                                           \
        }

Here dev_name is CONFIG_NET_VENDER_NAME, which we assume is configured as "vender"; sfx is 0; and _mtu is VENDER_MTU, which we assume is configured as 2048. NET_IF_OFFLOAD_INIT then expands to:

    static struct net_if_dev __net_if_dev_vender_0
        __attribute__((section("._net_if_dev.static.__net_if_dev_vender_0"))) = {
        .dev = &_device_vender,
        .mtu = 2048,
    };

    static struct net_if __net_if_vender_0[NET_IF_MAX_CONFIGS]
        __attribute__((section("._net_if.static.net_if"))) = {
        [0 ... (NET_IF_MAX_CONFIGS - 1)] = {
            .if_dev = &__net_if_dev_vender_0,
            NET_IF_CONFIG_INIT
        }
    };
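Placing objects in a dedicated section and walking that section later is the core trick here. Zephyr does this with its own linker scripts and iteration macros. The sketch below reproduces the same idea on a hosted GCC or Clang toolchain targeting ELF (for example Linux), relying on the GNU linker's automatically generated __start_<section> and __stop_<section> symbols instead of Zephyr's machinery. All names in it (struct demo_if, DEMO_IF_REGISTER, the demo_if_ptrs section) are made up for the illustration.

```c
#include <stddef.h>

struct demo_if {
	const char *name;
	int mtu;
	int initialized;
};

/*
 * Define an interface object and drop a pointer to it into the
 * "demo_if_ptrs" section. Storing pointers keeps every section entry
 * the same size, so the section can be walked like an array.
 */
#define DEMO_IF_REGISTER(_name, _mtu)                                  \
	static struct demo_if demo_if_##_name = {                      \
		.name = #_name, .mtu = (_mtu),                         \
	};                                                             \
	static struct demo_if *demo_if_ptr_##_name                     \
	__attribute__((used, section("demo_if_ptrs"))) = &demo_if_##_name

DEMO_IF_REGISTER(vender, 2048);
DEMO_IF_REGISTER(other, 1500);

/* Provided by the GNU linker for sections whose name is a C identifier. */
extern struct demo_if *__start_demo_if_ptrs[];
extern struct demo_if *__stop_demo_if_ptrs[];

/* Walk the section, mark each entry initialized, return the entry count. */
int demo_if_init_all(void)
{
	int count = 0;

	for (struct demo_if **p = __start_demo_if_ptrs;
	     p < __stop_demo_if_ptrs; p++) {
		(*p)->initialized = 1;
		count++;
	}
	return count;
}
```

Nothing in demo_if_init_all names the individual interfaces; it only knows the section boundaries. That is the property that lets a driver register itself at build time without the network core having a list of drivers.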

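Z_DEVICE_DEFINE was already covered under DEVICE_DEFINE in the article on the Zephyr driver model, so it is not expanded in detail here. It is enough to know that the expansion has the following form:

    const struct device _device_vender = {
        ...
        .api = &vender_iface_api,
        ...
    };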

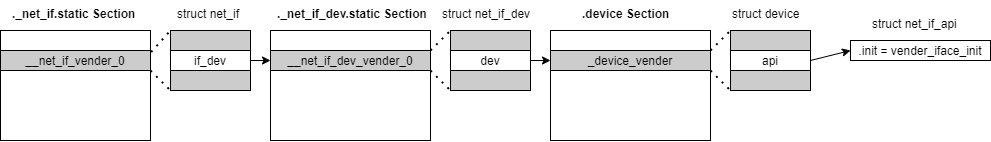

总结上面的放置和指向关系如下图:

Run-time initialization

The run-time initialization call chain is:

net_core.c (subsys/net/ip): net_init -> init_rx_queues -> net_if.c (subsys/net/ip): net_if_init

    void net_if_init(void)
    {
        int if_count = 0;

        NET_DBG("");

        net_tc_tx_init();

        /* Iterate over the ifaces in the _net_if section and initialize
         * each one; this walk reaches __net_if_vender_0.
         */
        Z_STRUCT_SECTION_FOREACH(net_if, iface) {
            init_iface(iface);
            if_count++;
        }
        ....
    }

    /* From __net_if_vender_0, find __net_if_dev_vender_0, then _device_vender. */
    static inline const struct device *net_if_get_device(struct net_if *iface)
    {
        return iface->if_dev->dev;
    }

    static inline void init_iface(struct net_if *iface)
    {
        /* The api of _device_vender is &vender_iface_api. */
        const struct net_if_api *api = net_if_get_device(iface)->api;

        if (!api || !api->init) {
            NET_ERR("Iface %p driver API init NULL", iface);
            return;
        }

        NET_DBG("On iface %p", iface);

        /* This runs vender_iface_init from vender_iface_api, that is,
         * iface->if_dev->offload = &vender_offload; so that
         * __net_if_vender_0 -> __net_if_dev_vender_0 -> offload
         * points to vender_offload.
         */
        api->init(iface);
    }
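The pointer chain followed during initialization (iface, then if_dev, then dev, then api, then init) can be tried out in isolation. The following is a simplified model with stand-in types, not Zephyr code: the demo_ structs keep only the fields that take part in the chain, and a plain array replaces the linker section. It shows that the offload pointer is NULL until the init hook has run.

```c
#include <stddef.h>

struct demo_offload {
	int (*send)(const char *buf, size_t len);
};

struct demo_iface;

struct demo_if_api {
	void (*init)(struct demo_iface *iface);
};

struct demo_device {
	const void *api;		/* untyped, as in a generic device model */
};

struct demo_if_dev {
	const struct demo_device *dev;
	const struct demo_offload *offload;	/* NULL until init runs */
};

struct demo_iface {
	struct demo_if_dev *if_dev;
};

static int demo_vender_send(const char *buf, size_t len)
{
	(void)buf;
	return (int)len;	/* pretend every byte reached the vendor stack */
}

static const struct demo_offload demo_vender_offload = {
	.send = demo_vender_send,
};

/* Init hook: the only place that installs the offload table. */
static void demo_vender_iface_init(struct demo_iface *iface)
{
	iface->if_dev->offload = &demo_vender_offload;
}

static const struct demo_if_api demo_vender_api = {
	.init = demo_vender_iface_init,
};
static const struct demo_device demo_device_vender = {
	.api = &demo_vender_api,
};
static struct demo_if_dev demo_if_dev_vender = {
	.dev = &demo_device_vender,
};
struct demo_iface demo_ifaces[] = {
	{ .if_dev = &demo_if_dev_vender },
};

/* Same shape as init_iface(): fetch the API via the device, call init. */
int demo_init_iface(struct demo_iface *iface)
{
	const struct demo_if_api *api = iface->if_dev->dev->api;

	if (api == NULL || api->init == NULL) {
		return -1;
	}
	api->init(iface);
	return 0;
}
```

After demo_init_iface(&demo_ifaces[0]) returns 0, demo_ifaces[0].if_dev->offload points at demo_vender_offload, which mirrors the state the article describes for __net_if_vender_0 once net_if_init has finished.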

Call flow of net offload functions

As mentioned earlier, upper-layer calls to the net_offload_ APIs in net_offload.h end up in the vender_ functions of vender_offload. Take net_offload_send as an example:

    static inline int net_offload_send(struct net_if *iface,
                                       struct net_pkt *pkt,
                                       net_context_send_cb_t cb,
                                       k_timeout_t timeout,
                                       void *user_data)
    {
        NET_ASSERT(iface);                       /* iface must not be NULL */
        NET_ASSERT(net_if_offload(iface));       /* iface must support offload */
        NET_ASSERT(net_if_offload(iface)->send); /* the offload must implement send */

        /* This call is in fact vender_send from vender_offload. */
        return net_if_offload(iface)->send(
            pkt, cb,
            timeout_to_int32(timeout),
            user_data);
    }

    /* If the driver was registered and initialized, offload is vender_offload. */
    static inline struct net_offload *net_if_offload(struct net_if *iface)
    {
    #if defined(CONFIG_NET_OFFLOAD)
        return iface->if_dev->offload;
    #else
        return NULL;
    #endif
    }
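One detail in the wrapper is easy to miss: it accepts a k_timeout_t, while the driver callback receives an int32_t. The sketch below illustrates that kind of conversion with stand-in types. The mapping it uses (0 for no wait, -1 for wait forever, otherwise milliseconds) is an assumption made for this example; check timeout_to_int32() in the net_offload.h of your Zephyr version for the actual rule. demo_timeout_t, DEMO_TICKS_PER_SEC and the other demo_ names are hypothetical.

```c
#include <stdint.h>

#define DEMO_TICKS_PER_SEC 100		/* assumed tick rate: 10 ms per tick */
#define DEMO_TICKS_FOREVER (-1)

/* Stand-in for an opaque kernel timeout expressed in ticks. */
typedef struct {
	int64_t ticks;
} demo_timeout_t;

#define DEMO_NO_WAIT ((demo_timeout_t){ .ticks = 0 })
#define DEMO_FOREVER ((demo_timeout_t){ .ticks = DEMO_TICKS_FOREVER })
#define DEMO_MSEC(ms) \
	((demo_timeout_t){ .ticks = ((int64_t)(ms) * DEMO_TICKS_PER_SEC) / 1000 })

/* Flatten the opaque timeout into the plain integer a driver callback takes. */
int32_t demo_timeout_to_int32(demo_timeout_t timeout)
{
	if (timeout.ticks == 0) {
		return 0;	/* do not block */
	}
	if (timeout.ticks == DEMO_TICKS_FOREVER) {
		return -1;	/* block indefinitely */
	}
	/* Finite timeout: convert ticks back to milliseconds. */
	return (int32_t)((timeout.ticks * 1000) / DEMO_TICKS_PER_SEC);
}
```

Under this assumed mapping a vendor callback has to treat a negative timeout as "wait forever" and zero as "do not block" before handing the value to the third-party stack, which may use a different convention.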

How net offload hooks into TCP/IP

Zephyr's upward-facing TCP/IP interface is wrapped in net_context, and each net_context_ API has a path implemented with the net_offload_ API. When offload is in use, the net_offload calls are compiled into net_context. Take recv as an example:

    int net_context_recv(struct net_context *context,
                         net_context_recv_cb_t cb,
                         k_timeout_t timeout,
                         void *user_data)
    {
        int ret;

        k_mutex_lock(&context->lock, K_FOREVER);

        if (IS_ENABLED(CONFIG_NET_OFFLOAD) &&
            net_if_is_ip_offloaded(net_context_get_iface(context))) {
            ret = net_offload_recv(
                net_context_get_iface(context),
                context, cb, timeout, user_data);
            /* With offload in use, leave right away. */
            goto unlock;
        }

        /* Zephyr's native TCP/IP flow is omitted here. */
        ....

    unlock:
        k_mutex_unlock(&context->lock);

        return ret;
    }

Although the individual APIs differ somewhat, each one maps to a net_offload_ API. For details, search net_context.c for CONFIG_NET_OFFLOAD and look at how each API handles it.
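The branch-then-unlock shape shared by these functions can be modeled in a few lines. The sketch below is a stand-in, not Zephyr code: a counter replaces the mutex, a nullable function pointer replaces the "is this iface offloaded" check, and the demo_ names and return values are invented so that the two paths are easy to tell apart.

```c
#include <errno.h>
#include <stddef.h>

struct demo_iface {
	/* NULL means "use the native stack" in this model. */
	int (*offload_recv)(void *user_data);
};

struct demo_context {
	struct demo_iface *iface;
	int lock_depth;		/* stands in for the context mutex */
};

/* Native path, returns 1 so the test can identify it. */
static int demo_native_recv(void *user_data)
{
	(void)user_data;
	return 1;
}

/* Offloaded path, returns 2 so the test can identify it. */
int demo_vender_recv(void *user_data)
{
	(void)user_data;
	return 2;
}

int demo_context_recv(struct demo_context *ctx, void *user_data)
{
	int ret;

	if (ctx == NULL || ctx->iface == NULL) {
		return -EINVAL;
	}

	ctx->lock_depth++;		/* take the lock */

	if (ctx->iface->offload_recv != NULL) {
		/* Offloaded: delegate and skip the native flow entirely. */
		ret = ctx->iface->offload_recv(user_data);
		goto unlock;
	}

	ret = demo_native_recv(user_data);

unlock:
	ctx->lock_depth--;		/* both paths release the lock */
	return ret;
}
```

Both paths leave through the single unlock label, so the lock is released exactly once regardless of which stack served the call. That is the reason the early exit is written as a goto rather than a return.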

Also note that net offload operates at the TCP/IP level and does not offload ICMP. ICMP is therefore unsupported, so ping cannot be implemented. In icmpv4.c you can see that the offload case returns an error immediately:

    int net_icmpv4_send_echo_request(struct net_if *iface,
                                     struct in_addr *dst,
                                     uint16_t identifier,
                                     uint16_t sequence,
                                     const void *data,
                                     size_t data_size)
    {
        if (IS_ENABLED(CONFIG_NET_OFFLOAD) && net_if_is_ip_offloaded(iface)) {
            return -ENOTSUP;
        }
        ....
    }