This article briefly describes how Zephyr starts the first thread and performs context switches on Cortex-M.

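I had not originally planned to analyze Zephyr's OS kernel, but last week my laptop broke and I could only read code on my desktop, so I took a rough look at context switching and scheduling. Now that I have a new laptop, and so as not to waste the few hours spent reading the code, I have written down what I learned. Sure enough, writing it up took far longer than understanding the code.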

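This analysis is based on the nRF52832 (Arm Cortex-M4). Context switching is tightly tied to the chip architecture: registers, stack handling and interrupt usage all differ between architectures, so this article is mainly useful as a reference for the Cortex-M series.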

Starting the first thread

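The article "How Zephyr gets to main" already mentioned that Zephyr's main function is called from the main thread. This section looks in more detail at how Zephyr switches to the main thread.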

Build configuration

The code discussed below is analyzed with the following definitions:

    #define CONFIG_MULTITHREADING 1
    #define CONFIG_ARCH_HAS_CUSTOM_SWAP_TO_MAIN 1
    #define CONFIG_THREAD_STACK_INFO 1
    #define CONFIG_SCHED_DUMB 1
    #define CONFIG_TIMESLICING 1
    #define

Flow

The _Cstart function in kernel/init.c prepares the thread environment and then hands execution over to the first thread:

    static struct k_thread _main_thread_s;
    static struct k_thread _idle_thread_s;

    k_tid_t const _main_thread = (k_tid_t)&_main_thread_s;
    k_tid_t const _idle_thread = (k_tid_t)&_idle_thread_s;

    FUNC_NORETURN void _Cstart(void)
    {
        struct k_thread *dummy_thread = NULL;

        _IntLibInit();          // interrupt initialization
        kernel_arch_init();     // architecture-specific init, mainly setting up CPU registers

        // initialize drivers that do not depend on the kernel
        _sys_device_do_config_level(_SYS_INIT_LEVEL_PRE_KERNEL_1);
        _sys_device_do_config_level(_SYS_INIT_LEVEL_PRE_KERNEL_2);

        // set up the multithreading environment and create the main thread
        prepare_multithreading(dummy_thread);

        // switch to the main thread
        switch_to_main_thread();
    }

Creating the main thread

bg_thread_main is created as a thread through _setup_new_thread:

    static void prepare_multithreading(struct k_thread *dummy_thread)
    {
        // With CONFIG_ARCH_HAS_CUSTOM_SWAP_TO_MAIN, dummy_thread is NULL and unused.
        // The dummy_thread->stack_info initialization only applies when this option
        // is NOT set; running it here would dereference NULL.
        ARG_UNUSED(dummy_thread);

        // scheduler initialization
        _sched_init();

        // prime the ready-queue cache with the main thread, the first thread to run
        _ready_q.cache = _main_thread;

        // create the main thread
        _setup_new_thread(_main_thread, _main_stack,
                          MAIN_STACK_SIZE, bg_thread_main,
                          NULL, NULL, NULL,
                          CONFIG_MAIN_THREAD_PRIORITY, K_ESSENTIAL, "main");

        // mark the main thread as started and ready
        _mark_thread_as_started(_main_thread);
        _ready_thread(_main_thread);

        // create the idle thread
        init_idle_thread(_idle_thread, _idle_stack);
        _kernel.cpus[0].idle_thread = _idle_thread;

        initialize_timeouts();
    }

_setup_new_thread calls _new_thread to create the main thread. Its main job is to initialize the thread's stack in preparation for the context switch:

    void _new_thread(struct k_thread *thread, k_thread_stack_t *stack,
                     size_t stackSize, k_thread_entry_t pEntry,
                     void *parameter1, void *parameter2, void *parameter3,
                     int priority, unsigned int options)
    {
        // get the stack buffer address
        char *pStackMem = K_THREAD_STACK_BUFFER(stack);

        _ASSERT_VALID_PRIO(priority, pEntry);

        // high end of the usable stack, excluding the MPU guard
        char *stackEnd = pStackMem + stackSize - MPU_GUARD_ALIGN_AND_SIZE;
        struct __esf *pInitCtx;

        // initialize the thread control block: struct k_thread records the
        // main thread's priority, stack information, state, etc.
        _new_thread_init(thread, pStackMem, stackEnd - pStackMem, priority,
                         options);

        /* carve the thread entry struct from the "base" of the stack */
        // i.e. build the initial context at the stack base (its high-address
        // end, since the stack grows down)
        pInitCtx = (struct __esf *)(STACK_ROUND_DOWN(stackEnd -
                                    sizeof(struct __esf)));

        // Put _thread_entry in the PC slot of the frame. On the context switch,
        // _thread_entry is popped into PC and runs, and it then calls the
        // main thread's entry function.
        pInitCtx->pc = (u32_t)_thread_entry;

        /* force ARM mode by clearing LSB of address */
        // (on Cortex-M a stacked PC must have bit 0 clear; Thumb state comes
        // from the T bit in xPSR below)
        pInitCtx->pc &= 0xfffffffe;

        // Store the thread entry function and its 3 arguments in the a1~a4 slots
        // as the 4 arguments of _thread_entry; on the context switch they are
        // popped into r0~r3.
        pInitCtx->a1 = (u32_t)pEntry;
        pInitCtx->a2 = (u32_t)parameter1;
        pInitCtx->a3 = (u32_t)parameter2;
        pInitCtx->a4 = (u32_t)parameter3;
        pInitCtx->xpsr =
            0x01000000UL; /* clear all, thumb bit is 1, even if RO */

        thread->callee_saved.psp = (u32_t)pInitCtx;
        thread->arch.basepri = 0;
    }

    FUNC_NORETURN void _thread_entry(k_thread_entry_t entry,
                                     void *p1, void *p2, void *p3)
    {
        entry(p1, p2, p3);

        k_thread_abort(k_current_get());

        CODE_UNREACHABLE;
    }

    struct __esf {
        sys_define_gpr_with_alias(a1, r0);
        sys_define_gpr_with_alias(a2, r1);
        sys_define_gpr_with_alias(a3, r2);
        sys_define_gpr_with_alias(a4, r3);
        sys_define_gpr_with_alias(ip, r12);
        sys_define_gpr_with_alias(lr, r14);
        sys_define_gpr_with_alias(pc, r15);
        u32_t xpsr;
    };

    struct _callee_saved {
        u32_t v1;  /* r4 */
        u32_t v2;  /* r5 */
        u32_t v3;  /* r6 */
        u32_t v4;  /* r7 */
        u32_t v5;  /* r8 */
        u32_t v6;  /* r9 */
        u32_t v7;  /* r10 */
        u32_t v8;  /* r11 */
        u32_t psp; /* r13 */
    };

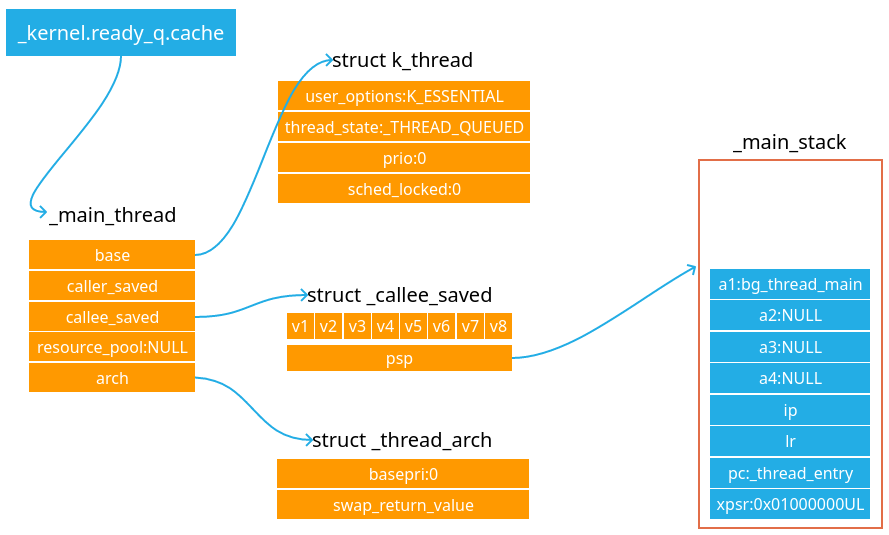

经过上述步骤thread&stack如下:

说明: 对于Cortex-M内核,中断发生时硬件会自动保存r0~r3,ip,lr,pc,xpsr因此_caller_saved不使用也无需初始化。一般情況下对于刚创建的thread来说这些值需要软件到堆栈也就是struct __esf,当Swap第一次调度时会从这里pop出开始执行。但对于一些架构(本文分析的cortex-m)无法直接通过swap进行第一个thread首次切换,就需要配置CONFIG_ARCH_HAS_CUSTOM_SWAP_TO_MAIN,进行第一个thread首次切换。
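To make the frame layout concrete, here is a host-side sketch (not Zephyr code) that carves an initial exception frame from the top of an ordinary byte buffer the way _new_thread does. struct esf_model, init_frame and the 8-byte MODEL_STACK_ALIGN are hypothetical stand-ins for struct __esf and STACK_ROUND_DOWN; Zephyr's real alignment comes from its own headers.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical model with the same field order as struct __esf. */
struct esf_model {
    uint32_t a1, a2, a3, a4; /* r0-r3 */
    uint32_t ip;             /* r12 */
    uint32_t lr;             /* r14 */
    uint32_t pc;             /* r15 */
    uint32_t xpsr;
};

/* Hypothetical alignment standing in for STACK_ROUND_DOWN. */
#define MODEL_STACK_ALIGN 8u

static uintptr_t round_down(uintptr_t addr, uintptr_t align)
{
    return addr & ~(align - 1u);
}

/* Carve an initial frame from the high end of a descending stack and return
 * the pointer that would be saved as callee_saved.psp. */
static struct esf_model *init_frame(uint8_t *stack, size_t size,
                                    uint32_t entry_wrapper, uint32_t entry,
                                    uint32_t p1, uint32_t p2, uint32_t p3)
{
    uint8_t *stack_end = stack + size;
    struct esf_model *frame = (struct esf_model *)round_down(
        (uintptr_t)(stack_end - sizeof(struct esf_model)), MODEL_STACK_ALIGN);

    memset(frame, 0, sizeof(*frame));
    frame->pc = entry_wrapper & 0xfffffffeu; /* stacked PC needs bit 0 clear */
    frame->a1 = entry;                       /* r0: thread entry function */
    frame->a2 = p1;                          /* r1-r3: its three arguments */
    frame->a3 = p2;
    frame->a4 = p3;
    frame->xpsr = 0x01000000u;               /* only the Thumb (T) bit set */
    assert((uintptr_t)frame % MODEL_STACK_ALIGN == 0);
    return frame;
}
```

The pointer returned by init_frame plays the role of the value _new_thread stores in thread->callee_saved.psp.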

Switching to the main thread

switch_to_main_thread->_arch_switch_to_main_thread

With CONFIG_ARCH_HAS_CUSTOM_SWAP_TO_MAIN, the first switch looks like this: the stack address is written directly into PSP, and bx then jumps to _thread_entry:

    static ALWAYS_INLINE void
    _arch_switch_to_main_thread(struct k_thread *main_thread,
                                k_thread_stack_t *main_stack,
                                size_t main_stack_size, k_thread_entry_t _main)
    {
        /* get high address of the stack, i.e. its start (stack grows down) */
        char *start_of_main_stack;

        // compute the main thread's initial stack address
        start_of_main_stack =
            K_THREAD_STACK_BUFFER(main_stack) + main_stack_size -
            MPU_GUARD_ALIGN_AND_SIZE;
        start_of_main_stack = (void *)STACK_ROUND_DOWN(start_of_main_stack);

        _current = main_thread;

        __asm__ __volatile__(
            /* move to main() thread stack */
            "msr PSP, %0 \t\n"      // PSP now points at the main thread's stack

            /* unlock interrupts */
            "cpsie if \t\n"
            "movs %%r1, #0 \n\t"
            "msr BASEPRI, %%r1 \n\t"

            /* branch to _thread_entry(_main, 0, 0, 0) */
            "mov %%r0, %1 \n\t"     // first argument: the main thread's entry function
            "bx %2 \t\n"            // call _thread_entry; the main thread is now running

            :
            : "r"(start_of_main_stack),
              "r"(_main), "r"(_thread_entry),
              "r"(main_thread)
            : "r0", "r1", "sp"
        );

        CODE_UNREACHABLE;
    }
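The stack-top arithmetic in _arch_switch_to_main_thread can be checked in isolation. In this sketch, MODEL_GUARD_SIZE and MODEL_STACK_ALIGN are hypothetical values standing in for MPU_GUARD_ALIGN_AND_SIZE and the alignment applied by STACK_ROUND_DOWN, both of which depend on the actual configuration.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical stand-ins for MPU_GUARD_ALIGN_AND_SIZE and the
 * STACK_ROUND_DOWN alignment; the real values are config-dependent. */
#define MODEL_GUARD_SIZE 32u
#define MODEL_STACK_ALIGN 8u

/* Highest usable, aligned address of a descending stack: the initial PSP. */
static uintptr_t initial_psp(uintptr_t buffer, size_t size)
{
    assert(size > MODEL_GUARD_SIZE);
    uintptr_t top = buffer + size - MODEL_GUARD_SIZE;
    return top & ~(uintptr_t)(MODEL_STACK_ALIGN - 1u);
}
```

For example, a 1024-byte buffer at 0x20000000 with these values gives an initial PSP of 0x200003E0; an unaligned end address is simply rounded down to the next 8-byte boundary.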

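Context switching

Once the first thread is running, it can create further threads, which are then scheduled to run through context switches.

Ways a context switch happens

A context switch is triggered in one of two ways:

- A thread itself pends PendSV, which triggers scheduling

- When an IRQ exits, the scheduler is consulted again

Each is described below.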

PendSV

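PendSV-based context switches fall roughly into two groups:

- Kernel objects switch context when they are started, waited on, or released; search for _reschedule to find the exact call sites.

- A thread switches context when it is put to sleep, yields, is suspended, or is aborted; search for _Swap to find the exact call sites.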

_reschedule also ends up calling _Swap; in the end, the context switch is performed by writing ICSR to pend PendSV:

    static inline int _Swap(unsigned int key)
    {
        int ret;

        _check_stack_sentinel();
        ret = __swap(key);

        return ret;
    }

    int __swap(int key)
    {
        /* store off key and return value */
        _current->arch.basepri = key;
        _current->arch.swap_return_value = _k_neg_eagain;

        /* set pending bit to make sure we will take a PendSV exception */
        SCB->ICSR |= SCB_ICSR_PENDSVSET_Msk;

        /* clear mask or enable all irqs to take a pendsv */
        irq_unlock(0);

        return _current->arch.swap_return_value;
    }
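The bookkeeping half of __swap can be modeled on a host as below. This is a sketch under stated assumptions: thread_model, swap_model and model_icsr are hypothetical, MODEL_EAGAIN (11) is only an illustrative number standing in for the value behind _k_neg_eagain, and the one hardware fact used is that PENDSVSET is bit 28 of the Cortex-M ICSR. As I read the code, presetting swap_return_value means a resumed thread sees -EAGAIN unless whoever wakes it stores a different return value.

```c
#include <assert.h>
#include <stdint.h>

#define MODEL_EAGAIN 11            /* illustrative value, not taken from Zephyr */
#define ICSR_PENDSVSET (1u << 28)  /* PENDSVSET bit of the Cortex-M ICSR */

/* Hypothetical stand-in for the arch fields of struct k_thread. */
struct thread_model {
    uint32_t basepri;          /* irq_lock key restored when switched back in */
    int swap_return_value;     /* what __swap returns once the thread resumes */
};

static uint32_t model_icsr;    /* stands in for SCB->ICSR */

/* Mirrors the first half of __swap: record state, then pend PendSV.
 * On hardware, PendSV is taken once irq_unlock(0) lowers the mask;
 * this model only sets the pending bit. */
static void swap_model(struct thread_model *current, uint32_t key)
{
    current->basepri = key;
    current->swap_return_value = -MODEL_EAGAIN;
    model_icsr |= ICSR_PENDSVSET;
}
```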

Once __swap has pended PendSV, the PendSV handler in arch/arm/core/swap_helper.S runs. Simplified:

    SECTION_FUNC(TEXT, __pendsv)

        /* protect the kernel state while we play with the thread lists */
        movs.n r0, #_EXC_IRQ_DEFAULT_PRIO
        msr BASEPRI, r0

        /* load _kernel into r1 and current k_thread into r2 */
        // r2 = the currently running thread (_kernel->cpus[0].current)
        ldr r1, =_kernel
        ldr r2, [r1, #_kernel_offset_to_current]

        /* addr of callee-saved regs in thread in r0 */
        // r0 = &_kernel->cpus[0].current->callee_saved
        ldr r0, =_thread_offset_to_callee_saved
        add r0, r2

        /* save callee-saved + psp in thread */
        // store v1~v8 (r4~r11) and PSP in the current thread's callee_saved
        mrs ip, PSP
        stmia r0, {v1-v8, ip}

        /*
         * Prepare to clear PendSV with interrupts unlocked, but
         * don't clear it yet. PendSV must not be cleared until
         * the new thread is context-switched in since all decisions
         * to pend PendSV have been taken with the current kernel
         * state and this is what we're handling currently.
         */
        ldr v4, =_SCS_ICSR
        ldr v3, =_SCS_ICSR_UNPENDSV

        /* _kernel is still in r1 */

        /* fetch the thread to run from the ready queue cache */
        // r2 = the thread to run next (_kernel->ready_q.cache)
        ldr r2, [r1, _kernel_offset_to_ready_q_cache]

        // make _kernel->cpus[0].current point to the incoming thread (in r2)
        str r2, [r1, #_kernel_offset_to_current]

        /*
         * Clear PendSV so that if another interrupt comes in and
         * decides, with the new kernel state based on the new thread
         * being context-switched in, that it needs to reschedule, it
         * will take, but that previously pended PendSVs do not take,
         * since they were based on the previous kernel state and this
         * has been handled.
         */

        /* _SCS_ICSR is still in v4 and _SCS_ICSR_UNPENDSV in v3 */
        str v3, [v4, #0]

        /* Restore previous interrupt disable state (irq_lock key) */
        ldr r0, [r2, #_thread_offset_to_basepri]
        movs.n r3, #0
        str r3, [r2, #_thread_offset_to_basepri]

        /* restore BASEPRI for the incoming thread */
        msr BASEPRI, r0

        /* load callee-saved + psp from thread */
        // load the incoming thread's callee_saved into v1~v8 and PSP;
        // from here on PSP points at the new thread's stack
        add r0, r2, #_thread_offset_to_callee_saved
        ldmia r0, {v1-v8, ip}
        msr PSP, ip

        /* exc return */
        // Exception return: the hardware pops r0~r3, ip, lr, pc and xpsr from
        // the new thread's stack, restoring its context so it resumes running.
        bx lr

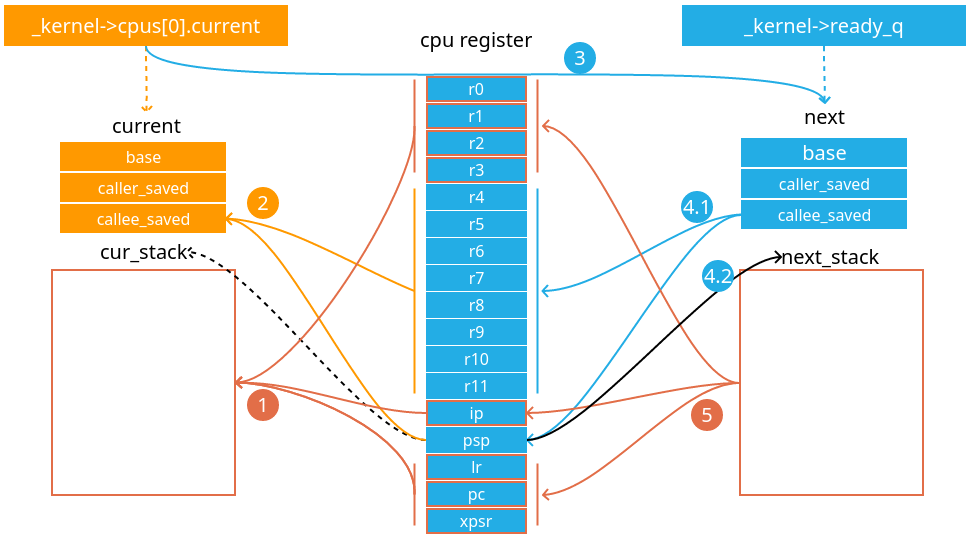

以上流程如下图:

- 中断pendsv发生,硬件入栈r0~r3,ip,lr,pc,xpsr

- 软件保存r4~r11,psp到当前thread的callee_saved

- 改变当前thread指针指向将要执行的thread

- 软件从将要执行的thread弹出callee_saved到r4~r11,psp,堆栈指针指向将要执行的thread

- 退出中断pendsv,硬件自动从将要执行的thread stack中弹出r0~r3,ip,lr,pc
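The five steps can be traced with a host-side model of the software part of __pendsv. The structures and pendsv_model below are hypothetical simplifications: registers are plain fields, and the hardware-stacked frame (r0~r3, ip, lr, pc, xpsr) is left out because the hardware, not the handler, saves and restores it.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical model of what the PendSV handler saves in software. */
struct callee_saved_model {
    uint32_t v[8];   /* r4-r11 */
    uint32_t psp;
};

struct thread_model {
    struct callee_saved_model callee_saved;
    uint32_t basepri;        /* irq_lock key stored earlier by __swap */
};

struct cpu_model {
    uint32_t r4_r11[8];
    uint32_t psp;
    uint32_t basepri;
};

struct kernel_model {
    struct thread_model *current;
    struct thread_model *ready_q_cache;   /* next thread picked by the scheduler */
};

/* Software half of __pendsv; hardware has already stacked the caller-saved
 * frame on the outgoing thread's stack. */
static void pendsv_model(struct kernel_model *k, struct cpu_model *cpu)
{
    /* save r4-r11 and PSP of the outgoing thread */
    memcpy(k->current->callee_saved.v, cpu->r4_r11, sizeof cpu->r4_r11);
    k->current->callee_saved.psp = cpu->psp;

    /* switch the current-thread pointer to the incoming thread */
    k->current = k->ready_q_cache;

    /* restore the incoming thread's irq_lock key, then clear the stored copy */
    cpu->basepri = k->current->basepri;
    k->current->basepri = 0;

    /* load r4-r11 and PSP of the incoming thread; the exception return
     * ("bx lr") would then unstack the hardware frame from this PSP */
    memcpy(cpu->r4_r11, k->current->callee_saved.v, sizeof cpu->r4_r11);
    cpu->psp = k->current->callee_saved.psp;
}
```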

IRQ

When an IRQ is serviced, _isr_wrapper runs; on exit it calls _IntExit (arch/arm/core/exc_exit.S), which triggers a context switch if another thread should now run.

With CONFIG_TIMESLICING enabled, a scheduling check is made on every time slice. The exit path looks like this:

    SECTION_SUBSEC_FUNC(TEXT, _HandlerModeExit, _IntExit)
    SECTION_SUBSEC_FUNC(TEXT, _HandlerModeExit, _ExcExit)

        ldr r0, =_kernel

        // r1 = current thread
        ldr r1, [r0, #_kernel_offset_to_current]

        // r0 = thread to be scheduled next
        ldr r0, [r0, _kernel_offset_to_ready_q_cache]

        // if the two differ, a reschedule is needed
        cmp r0, r1
        beq _EXIT_EXC

        /* context switch required, pend the PendSV exception */
        // pend PendSV; the actual context switch happens in the PendSV handler
        ldr r1, =_SCS_ICSR
        ldr r2, =_SCS_ICSR_PENDSV
        str r2, [r1]

    _ExcExitWithGdbStub:

    _EXIT_EXC:
        pop {lr}
        bx lr
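The decision _IntExit makes boils down to one pointer comparison. Below is a hypothetical host-side rendering of it; int_exit_model and the *_model structs are illustrative names, not Zephyr API, and ICSR_PENDSVSET assumes the standard Cortex-M bit position (bit 28).

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define ICSR_PENDSVSET (1u << 28)  /* PENDSVSET bit of the Cortex-M ICSR */

struct thread_model { int id; };

struct kernel_model {
    struct thread_model *current;
    struct thread_model *ready_q_cache;
};

/* Mirrors the check in _IntExit: pend PendSV only if the scheduler has picked
 * a different thread. The switch itself happens later, in the PendSV handler. */
static bool int_exit_model(const struct kernel_model *k, uint32_t *icsr)
{
    if (k->ready_q_cache == k->current) {
        return false;           /* beq _EXIT_EXC: return to the same thread */
    }
    *icsr = ICSR_PENDSVSET;     /* str r2, [r1]: pend PendSV */
    return true;
}
```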

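A brief note on scheduling

This article does not analyze the scheduler in depth; it only briefly describes the scheduling features used here.

Priority scheduling

Zephyr supports the following three implementations of priority scheduling:

- CONFIG_SCHED_DUMB: for systems with few threads; it does not use a red-black tree and saves about 2 KB of code size

- CONFIG_SCHED_SCALABLE: for systems with many threads (more than about 20); it uses a red-black tree

- CONFIG_SCHED_MULTIQ: the default scheduler before version 1.12

The scheduling algorithm is implemented mainly in:

_priq_run_add/_priq_run_remove/_priq_run_best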
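For CONFIG_SCHED_DUMB, my understanding is that the ready queue is a priority-sorted list in which a new thread goes in front of the first thread it strictly outranks, so threads of equal priority stay in FIFO order. The sketch below shows that idea with a hand-rolled singly linked list; priq_dumb_add, priq_dumb_remove, priq_dumb_best and struct thread_model are hypothetical names, not Zephyr's functions, which work on Zephyr's own list types.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical thread: lower number = higher priority, as in Zephyr. */
struct thread_model {
    int prio;
    struct thread_model *next;
};

/* Insert before the first thread with a strictly lower priority (larger
 * number), so equal-priority threads keep FIFO order. */
static void priq_dumb_add(struct thread_model **head, struct thread_model *t)
{
    struct thread_model **pp = head;
    while (*pp != NULL && (*pp)->prio <= t->prio) {
        pp = &(*pp)->next;
    }
    t->next = *pp;
    *pp = t;
}

static void priq_dumb_remove(struct thread_model **head, struct thread_model *t)
{
    for (struct thread_model **pp = head; *pp != NULL; pp = &(*pp)->next) {
        if (*pp == t) {
            *pp = t->next;
            t->next = NULL;
            return;
        }
    }
}

/* The best thread is simply the list head. */
static struct thread_model *priq_dumb_best(struct thread_model *head)
{
    return head;
}
```

Removing a thread and adding it back moves it behind the other threads of its priority, which is the effect time slicing relies on.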

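Time slicing

Without time slicing, a thread must voluntarily give up the CPU before another thread of the same priority can run. Here time slicing is enabled; on the nRF52832, RTC1 provides the timer interrupt that drives the time slices.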

Interrupt initialization

drivers/timer/sys_clock_init.c:

    SYS_DEVICE_DEFINE("sys_clock", z_clock_driver_init, z_clock_device_ctrl,
                      PRE_KERNEL_2, CONFIG_SYSTEM_CLOCK_INIT_PRIORITY);

drivers/timer/nrf_rtc_timer.c (excerpt):

    int z_clock_driver_init(struct device *device)
    {
        NVIC_ClearPendingIRQ(NRF5_IRQ_RTC1_IRQn);
        IRQ_CONNECT(NRF5_IRQ_RTC1_IRQn, 1, rtc1_nrf5_isr, 0, 0);
        irq_enable(NRF5_IRQ_RTC1_IRQn);

        /* ... */

        return 0;
    }

Interrupt vector table

The software interrupt vector table is looked up by the IRQ handling code when an IRQ occurs.

tests/kernel/arm_irq_vector_table/src/arm_irq_vector_table.c:

    void rtc1_nrf5_isr(void);

    typedef void (*vth)(void); /* Vector Table Handler */

    vth __irq_vector_table _irq_vector_table[RTC1_IRQn + 1] = {
        isr0, isr1, isr2,
        0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,
        rtc1_nrf5_isr
    };

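In a regular build, arch/common/gen_isr_tables.py generates _sw_isr_table (and the hardware _irq_vector_table) from the interrupts registered with IRQ_CONNECT(), such as the RTC1 connection in z_clock_driver_init; the hand-written table above comes from a test.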

Interrupt handler

arch/arm/core/isr_wrapper.S responds to the interrupt by looking up the software ISR table (excerpt):

    SECTION_FUNC(TEXT, _isr_wrapper)
        // (excerpt: r0 already holds the offset of this IRQ's table entry)
        ldr r1, =_sw_isr_table
        add r1, r1, r0  /* table entry: ISRs must have their MSB set to stay
                         * in thumb mode */

        ldm r1!,{r0,r3} /* arg in r0, ISR in r3 */
        blx r3          /* call ISR */

        b _IntExit
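The table lookup in _isr_wrapper can be mirrored in C. In this sketch, struct isr_entry_model keeps the argument-then-handler order implied by `ldm r1!,{r0,r3}`; dispatch_irq and the example handler count_isr are hypothetical, and the IRQ number (on Cortex-M, the exception number read from IPSR minus 16) is simply passed in.

```c
#include <assert.h>
#include <stddef.h>

/* Entry layout implied by "ldm r1!,{r0,r3}": argument first, then handler. */
struct isr_entry_model {
    void *arg;
    void (*isr)(void *arg);
};

/* Hypothetical dispatcher: index the table by IRQ number and call the ISR
 * with its registered argument. */
static void dispatch_irq(const struct isr_entry_model *table, size_t n,
                         size_t irq)
{
    assert(irq < n);
    assert(table[irq].isr != NULL);
    table[irq].isr(table[irq].arg);
}

/* Example handler: counts how many times it was invoked. */
static void count_isr(void *arg)
{
    int *counter = arg;
    ++*counter;
}
```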

When the RTC interrupt fires, rtc1_nrf5_isr is looked up and executed, and the calls proceed in this order:

rtc1_nrf5_isr->rtc_announce_set_next->z_clock_announce->z_time_slice

z_time_slice does the time-slice accounting and, once the slice is used up, moves on to the next thread to run:

    void z_time_slice(int ticks)
    {
        if (slice_time && sliceable(_current)) {
            if (ticks >= _current_cpu->slice_ticks) {
                _move_thread_to_end_of_prio_q(_current);
                reset_time_slice();
            } else {
                _current_cpu->slice_ticks -= ticks; // charge the ticks to the current thread's slice
            }
        }
    }

When the time slice is used up, the thread to schedule next is again determined through _priq_run_remove/_priq_run_add:

    void _move_thread_to_end_of_prio_q(struct k_thread *thread)
    {
        LOCKED(&sched_lock) {
            // take the thread out of the run queue and add it back behind
            // the other threads of the same priority
            _priq_run_remove(&_kernel.ready_q.runq, thread);
            _priq_run_add(&_kernel.ready_q.runq, thread);
            _mark_thread_as_queued(thread);
            update_cache(thread == _current);
        }
    }
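Together, z_time_slice and _move_thread_to_end_of_prio_q give round-robin among equal-priority threads. The host-side sketch below assumes a single CPU, a run queue holding only equal-priority threads, and a running thread that is always sliceable; struct rr_model, time_slice_model and move_head_to_end are hypothetical names.

```c
#include <assert.h>
#include <stddef.h>

#define MAX_THREADS 4

/* Hypothetical model: ids[0] is the running thread; slice_ticks plays the
 * role of _current_cpu->slice_ticks. */
struct rr_model {
    int ids[MAX_THREADS];
    size_t count;
    int slice_len;     /* full slice, in ticks */
    int slice_ticks;   /* ticks left in the current slice */
};

/* Move the running thread behind the others, keeping their order. */
static void move_head_to_end(struct rr_model *m)
{
    int head = m->ids[0];
    for (size_t i = 1; i < m->count; i++) {
        m->ids[i - 1] = m->ids[i];
    }
    m->ids[m->count - 1] = head;
}

/* Called with the number of ticks announced by the timer interrupt. */
static void time_slice_model(struct rr_model *m, int ticks)
{
    assert(m->count > 0);
    if (ticks >= m->slice_ticks) {
        move_head_to_end(m);             /* like _move_thread_to_end_of_prio_q */
        m->slice_ticks = m->slice_len;   /* like reset_time_slice */
    } else {
        m->slice_ticks -= ticks;         /* charge the running thread */
    }
}
```

With a 2-tick slice and threads 1, 2, 3, two 1-tick announcements leave thread 2 at the head and thread 1 at the tail; on the real system, _IntExit would then pend PendSV to switch to it.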

Finally, when the IRQ exits, _IntExit pends PendSV, and the resulting context switch runs the newly scheduled thread.

References

https://docs.zephyrproject.org/latest/kernel/threads/scheduling.html